K-Means Clustering

17.4. K-Means Clustering

An initial version of the k-means algorithm was developed by Lloyd in 1957 but was first published in 1982 [57].

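Given a set of data points, the k-means algorithm partitions them into $k$ clusters, where each cluster is represented by its centroid.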

See [29, 46, 59] for a detailed treatment of the algorithm.

17.4.1. Basic Algorithm

The k-means clustering algorithm is a hard clustering algorithm: it assigns each data point to exactly one cluster.

Algorithm 17.1 (Basic k-means clustering algorithm)

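Select $k$ points as the initial centroids.

Repeat

Segmentation: Form $k$ clusters by assigning each data point to its nearest centroid.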

Estimation: Recompute the centroid for each cluster.

Until the segmentation has stopped improving.

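The input to the algorithm is an unlabeled dataset

$$
X = \{ x_1, x_2, \dots, x_n \}
$$

where each data point belongs to the ambient feature space $\mathbb{R}^m$.

Our goal is to partition the dataset into $k$ clusters.

The clustering is denoted by

$$
\mathcal{C} = \{ C_1, C_2, \dots, C_k \}
$$

where $C_j$ is the set of indices of the data points belonging to the $j$-th cluster.

The clustering can be conveniently represented in the form of a label array $L$ with $n$ entries, each taking a value in $\{1, \dots, k\}$.

In other words, if $L[i] = j$, then the $i$-th data point belongs to the $j$-th cluster $C_j$.

Each cluster $C_j$ is represented by its centroid $\mu_j$.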

The algorithm consists of two major steps:

- Segmentation
- Estimation

The goal of the segmentation step is to partition the data points into clusters according to the nearest cluster centroids.

The goal of the estimation step is to compute the new centroid for each cluster based on the current clustering.

We start with an initial set of centroids for each cluster.

One way to pick the initial centroids is to pick $k$ points at random from the dataset.

In each iteration, we segment the data points into individual clusters by assigning a data point to the cluster corresponding to the nearest centroid.

Then, we estimate the new centroid for each cluster.

We return a labels array together with the final centroids of the clusters.
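The two steps described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `kmeans`, the parameters `max_iter` and `seed`, the choice of random data points as initial centroids, and the handling of empty clusters (keeping the old centroid) are all assumptions made for this sketch. Labels are 0-based here, following Python conventions.

```python
import numpy as np


def kmeans(X, k, max_iter=100, seed=0):
    """Basic k-means: alternate segmentation and estimation steps."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    # Initialization: pick k distinct data points as the initial centroids.
    centroids = X[rng.choice(n, size=k, replace=False)].astype(float)
    labels = np.full(n, -1)
    for _ in range(max_iter):
        # Segmentation: squared Euclidean distance of every point to every centroid.
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        # Stop when the segmentation no longer changes.
        if np.array_equal(new_labels, labels):
            break
        labels = new_labels
        # Estimation: recompute each centroid as the mean of its members.
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # assumption: keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    return labels, centroids


# Usage: two well-separated synthetic blobs in the plane.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.3, (50, 2)), rng.normal(5.0, 0.3, (50, 2))])
labels, centroids = kmeans(X, k=2)
```

The distance computation uses broadcasting to form an `n x k` table of squared distances in one expression, which keeps the segmentation step short at the cost of some extra memory.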

The basic structure of k-means described above is not a complete algorithm; it is better viewed as a template. A concrete implementation must make careful choices for several aspects:

- Initialization of the centroids
- Number of clusters
- Choice of the distance metric
- Criterion for evaluation of the quality of the clustering
- Stopping criterion

17.4.1.1. Example

Example 17.7 (A k-means example)

We show a simple simulation of the k-means algorithm.

The data consists of randomly generated points organized in 4 clusters.

The algorithm is seeded with random points at the beginning.

After each iteration, the centroids are moved to a new location based on the current members of the cluster.

The animation below shows how the centroids are moving and cluster memberships are changing.

Fig. 17.6 This animation shows how the centroids move from one iteration to the next. The centroids have been initialized randomly in the first iteration.

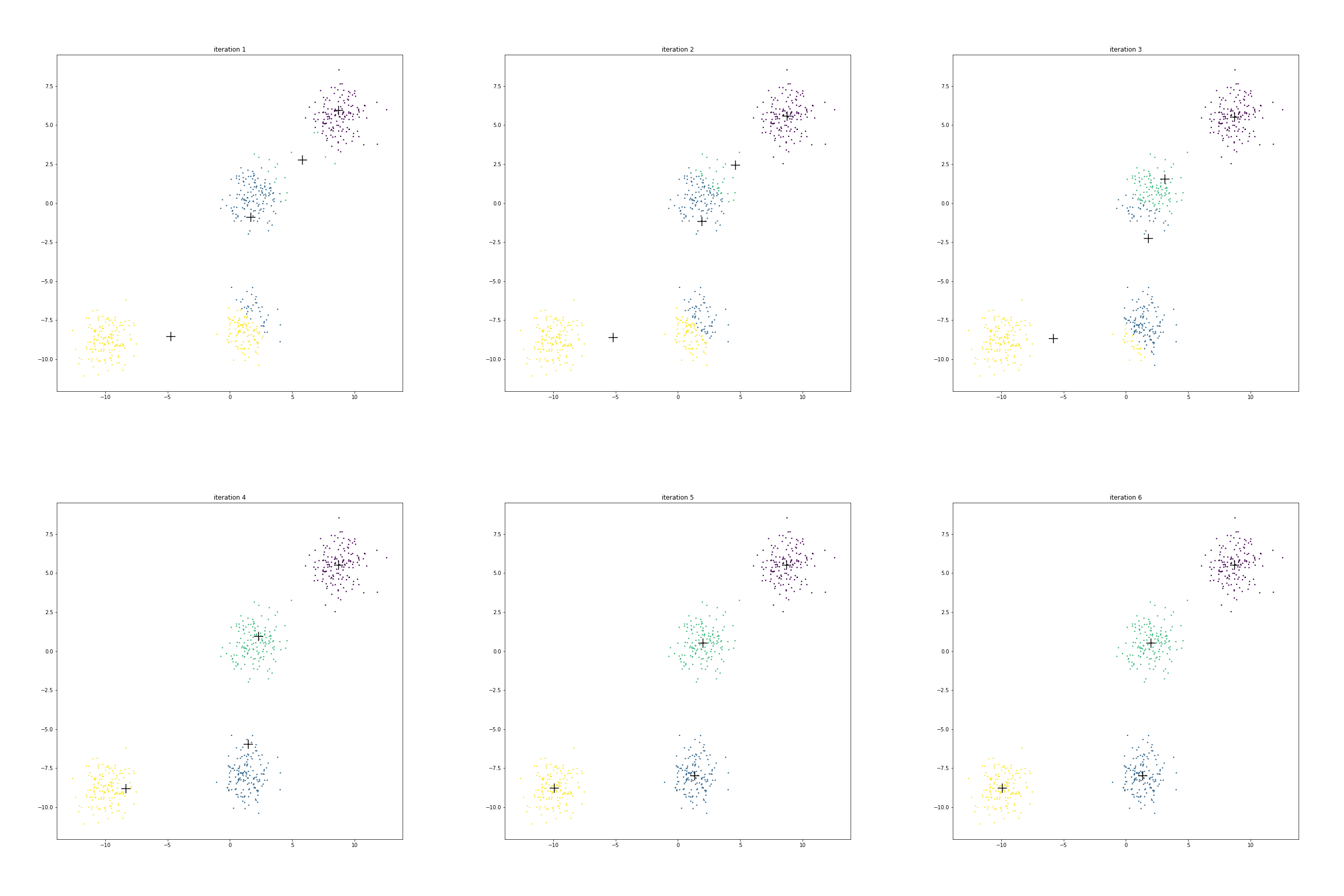

The figure below shows the clusters and centroids for all six iterations in a grid. You can open this figure in a different tab to see an enlarged version.

We have 4 centroids randomly initialized at iteration 1. Let us call them:

A near

B near

C near

D near

Look at centroid A over time

It starts from the location

More than half of the points from the eventual blue cluster still belong to the yellow cluster in the first iteration.

As the centroid starts moving towards its eventual location near

Next look at the journey of centroid B.

In the beginning it contains about 70% points of the cluster around origin and only about 20% points of the cluster around

But it is enough to start pulling the centroid downward.

As centroid C starts moving left and down, the points around the origin start becoming green.

Since centroid A is also moving left, the points around

Centroid D doesn’t really move much.

Initially it is in competition with centroid C for some of its points around its boundary.

However, as C starts moving to its final destination, all the points around D turn purple.

Since not many points had to change cluster membership here, the centroid location did not shift much over these iterations.

We also note that after iteration 5, there is no further change in cluster memberships. Hence the centroids stop moving.

17.4.1.2. Stopping Criterion

As we saw in the previous example, the centroids move quickly during the initial iterations and then slow down considerably. Once the segmentation stops changing, the centroids also stop moving. When the cluster boundaries are not clear, it is often good enough to stop the algorithm when only a small fraction of the points are changing clusters.
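One possible way to express such a relaxed stopping rule is to compare the label arrays of two consecutive iterations. The helper name `should_stop` and the tolerance parameter `tol` are hypothetical, introduced only for this sketch; the threshold value is something the user would need to choose.

```python
import numpy as np


def should_stop(old_labels, new_labels, tol=0.01):
    """Return True when at most a fraction `tol` of the points changed cluster."""
    old_labels = np.asarray(old_labels)
    new_labels = np.asarray(new_labels)
    # Fraction of points whose label differs between the two iterations.
    changed = np.mean(old_labels != new_labels)
    return bool(changed <= tol)


old = np.array([0, 0, 1, 1, 2, 2, 2, 0, 1, 2])
new = np.array([0, 0, 1, 1, 2, 2, 2, 0, 1, 1])  # one point out of ten moved
print(should_stop(old, new, tol=0.05))  # 10% changed, above the tolerance
print(should_stop(old, new, tol=0.10))  # 10% changed, within the tolerance
```

Setting `tol=0` recovers the strict criterion in which the algorithm stops only when the segmentation is unchanged.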

17.4.1.3. Evaluation

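A simple measure for the evaluation of the k-means algorithm is the within-cluster-scatter given by

$$
w(\mathcal{C}) = \sum_{j=1}^{k} \sum_{i \in C_j} \| x_i - \mu_j \|_2^2
$$

where $x_i$ is the $i$-th data point, $C_j$ is the set of indices of the points in the $j$-th cluster and $\mu_j$ is the centroid of that cluster.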

As can be seen from the formula, this is essentially the sum of squared errors w.r.t. the corresponding cluster centroids.
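As a small worked example, the scatter can be computed directly from the data, the label array, and the centroids. The function name `within_cluster_scatter` is an assumption for this sketch, and labels are 0-based so that they can index the centroid array.

```python
import numpy as np


def within_cluster_scatter(X, labels, centroids):
    """Sum of squared Euclidean distances of points to their assigned centroids."""
    diffs = X - centroids[labels]  # each row minus the centroid of its own cluster
    return float((diffs ** 2).sum())


X = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]])
labels = np.array([0, 0, 1, 1])
centroids = np.array([[0.0, 1.0], [10.0, 1.0]])
print(within_cluster_scatter(X, labels, centroids))  # each point is 1 unit away: 4.0
```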

17.4.1.4. Convergence

It can be shown that the scatter is monotonically decreasing over the k-means iterations.

There are at most $k^n$ possible clusterings, since each of the $n$ data points is assigned to one of the $k$ clusters.

Each partition has a different value of scatter.

Since the scatter is monotonically decreasing, no configuration is ever repeated.

Since the number of possible clusterings is finite, the algorithm must terminate eventually.
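The monotonicity claim can be made concrete with a short chain of inequalities. The notation is introduced only for this sketch: $\mathcal{C}^{(t)}$ and $\mu^{(t)}$ denote the clustering and the centroids after iteration $t$, and $w(\mathcal{C}, \mu)$ is the sum of squared distances from each point to the centroid of its assigned cluster.

$$
w\big(\mathcal{C}^{(t+1)}, \mu^{(t+1)}\big)
\;\le\; w\big(\mathcal{C}^{(t+1)}, \mu^{(t)}\big)
\;\le\; w\big(\mathcal{C}^{(t)}, \mu^{(t)}\big)
$$

The right inequality holds because the segmentation step reassigns each point to its nearest centroid among $\mu^{(t)}$, so no individual squared distance grows. The left inequality holds because the mean of a cluster minimizes $\sum_{i \in C_j} \| x_i - \mu \|_2^2$ over all choices of $\mu$, so replacing each centroid by the cluster mean cannot increase the sum.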

17.4.1.5. Number of Clusters

The algorithm requires that the user provide the desired number of clusters $k$.

This raises the question: how do we select the number of clusters? Here are a few choices:

- Run the algorithm on the data with different values of $k$ and compare the quality of the resulting clusterings.
- Use another clustering method like EM.
- Use some domain-specific prior knowledge to decide the number of clusters.
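The first option can be sketched as a sweep over candidate cluster counts, recording the within-cluster scatter for each. Everything here is illustrative: the helper `kmeans_scatter`, the synthetic three-blob data, and the use of 20 random restarts per value are assumptions. One common heuristic (not stated in the text above) is to look for the value after which the scatter stops dropping sharply.

```python
import numpy as np


def kmeans_scatter(X, k, seed=0, max_iter=100):
    """Run a basic k-means and return the final within-cluster scatter."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(max_iter):
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Recompute means; an empty cluster keeps its previous centroid.
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float(d2.min(axis=1).sum())


# Synthetic data: three tight blobs in the plane.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
X = np.vstack([c + rng.normal(0.0, 0.4, (60, 2)) for c in centers])

# For each candidate k, keep the best scatter over several random restarts.
scatters = {k: min(kmeans_scatter(X, k, seed=s) for s in range(20))
            for k in range(1, 7)}
for k, w in scatters.items():
    print(k, round(w, 2))
```

Note that the scatter alone cannot pick the number of clusters automatically, since it generally keeps shrinking as more clusters are added; it only helps to compare candidates.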

17.4.1.6. Initialization

There are a few options for selection of initial centroids:

- Randomly chosen data points from the data set.
- Random points in the feature space.
- Create a random partition of the data set into $k$ clusters and use the centroids of those clusters.
- Identify regions of space which are dense (in terms of number of data points per unit volume) and then select points from those regions as centroids.
- Uniformly spaced points in the feature space.
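Three of these options are simple enough to sketch directly. The function names are hypothetical, and "random points in the feature space" is interpreted here as uniform sampling from the bounding box of the data, which is only one possible reading.

```python
import numpy as np


def init_from_data(X, k, rng):
    """Pick k distinct data points as the initial centroids."""
    return X[rng.choice(len(X), size=k, replace=False)].astype(float)


def init_in_bounding_box(X, k, rng):
    """Draw k points uniformly from the axis-aligned bounding box of the data."""
    lo, hi = X.min(axis=0), X.max(axis=0)
    return rng.uniform(lo, hi, size=(k, X.shape[1]))


def init_from_random_partition(X, k, rng):
    """Randomly split the points into k groups and use the group means."""
    # A shuffled index modulo k gives every group at least one point when n >= k.
    labels = rng.permutation(len(X)) % k
    return np.array([X[labels == j].mean(axis=0) for j in range(k)])


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
c_data = init_from_data(X, 3, rng)
c_box = init_in_bounding_box(X, 3, rng)
c_part = init_from_random_partition(X, 3, rng)
```

With a random partition of many points, the group means tend to land close to the overall mean of the data, so the initial centroids start near each other; the other two options spread them out more.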

Later, we will discuss a specific algorithm called k-means++ which carefully selects the initial centroids.

The k-means algorithm does not guarantee a globally optimal clustering for a given number of clusters. It converges to a locally optimal solution that depends on the initial selection of the centroids.

One way to address this is to run the k-means algorithm multiple times with different initializations. At the end, we select the clustering with the smallest scatter.
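A minimal sketch of this restart strategy follows. The names `kmeans` and `kmeans_restarts`, the parameter `n_restarts`, and the choice of random data points for each initialization are assumptions for illustration.

```python
import numpy as np


def kmeans(X, k, rng, max_iter=100):
    """Basic k-means from one random start; returns labels, centroids, scatter."""
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(max_iter):
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Recompute means; an empty cluster keeps its previous centroid.
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1), centroids, float(d2.min(axis=1).sum())


def kmeans_restarts(X, k, n_restarts=10, seed=0):
    """Run k-means several times and keep the run with the smallest scatter."""
    rng = np.random.default_rng(seed)
    runs = [kmeans(X, k, rng) for _ in range(n_restarts)]
    return min(runs, key=lambda run: run[2])


# Usage on synthetic data with four blobs.
data_rng = np.random.default_rng(42)
centers = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0], [5.0, 5.0]])
X = np.vstack([c + data_rng.normal(0.0, 0.5, (40, 2)) for c in centers])
labels, centroids, scatter = kmeans_restarts(X, k=4)
```

The restarts are independent, so the cost grows linearly with `n_restarts`; the selected run is still only the best among those tried, not a guaranteed global optimum.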

17.4.2. Scale and Correlation

One way to address the issue of scale variation in features and correlation among features is using the Mahalanobis distance for distance calculations. To do this, in the estimation step we shall calculate the covariance matrix for each cluster separately.

Algorithm 17.2 (k-means clustering with Mahalanobis distance)

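Inputs:

- The data set: $X = \{ x_1, \dots, x_n \} \subset \mathbb{R}^m$
- Number of clusters: $k$

Outputs:

- Clusters: $\mathcal{C} = \{ C_1, \dots, C_k \}$
- Cluster labels array: $L[1:n]$

Initialization:

- For each cluster $C_j$, $j = 1, \dots, k$:
  - Initialize the mean $\mu_j$ randomly.
  - Set the covariance matrix $\Sigma_j = I$.

Algorithm:

1. If the segmentation has stopped changing: break.
2. Segmentation: for each data point $x_i$:
   - Find the nearest centroid via Mahalanobis distance.
   - Use the centroid number as the label for the point: $L[i] \leftarrow \arg\min_{j} \, (x_i - \mu_j)^T \Sigma_j^{-1} (x_i - \mu_j)$.
   - Assign $x_i$ to the cluster $C_{L[i]}$.
3. Estimation: for each cluster $C_j$:
   - Compute new mean/centroid for the cluster: $\mu_j \leftarrow \frac{1}{|C_j|} \sum_{i \in C_j} x_i$.
   - Compute the covariance matrix for the cluster: $\Sigma_j \leftarrow \frac{1}{|C_j|} \sum_{i \in C_j} (x_i - \mu_j)(x_i - \mu_j)^T$.
4. Go to step 1.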
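The steps of this variant can be sketched in NumPy as follows. Several details are assumptions made for the sketch and are not taken from the algorithm statement: the means are initialized from random data points, the covariances start as identity matrices, the covariance is normalized by the cluster size, a small ridge term `reg` is added to keep each covariance invertible, and clusters with too few points keep their previous parameters.

```python
import numpy as np


def kmeans_mahalanobis(X, k, max_iter=100, reg=1e-6, seed=0):
    """k-means with a per-cluster Mahalanobis distance (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    n, m = X.shape
    # Initialization (assumed): random data points as means, identity covariances.
    means = X[rng.choice(n, size=k, replace=False)].astype(float)
    covs = np.stack([np.eye(m) for _ in range(k)])
    labels = np.full(n, -1)
    for _ in range(max_iter):
        # Segmentation: squared Mahalanobis distance of every point to every cluster.
        d2 = np.empty((n, k))
        for j in range(k):
            diff = X - means[j]
            d2[:, j] = np.einsum("ij,ij->i", diff @ np.linalg.inv(covs[j]), diff)
        new_labels = d2.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # the segmentation has stopped changing
        labels = new_labels
        # Estimation: update the mean and covariance of each cluster.
        for j in range(k):
            members = X[labels == j]
            if len(members) > m:  # assumption: skip clusters that are too small
                means[j] = members.mean(axis=0)
                diff = members - means[j]
                covs[j] = diff.T @ diff / len(members) + reg * np.eye(m)
    return labels, means, covs


# Usage: two elongated synthetic clusters whose features have very different scales.
rng = np.random.default_rng(0)
A = rng.normal(0.0, 1.0, (100, 2)) * np.array([5.0, 0.5])
B = rng.normal(0.0, 1.0, (100, 2)) * np.array([5.0, 0.5]) + np.array([0.0, 6.0])
X = np.vstack([A, B])
labels, means, covs = kmeans_mahalanobis(X, k=2)
```

Because the first segmentation uses identity covariances, it coincides with the Euclidean assignment; the per-cluster covariances only start to shape the distances from the second iteration onward.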

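A within-cluster-scatter can be defined as

$$
w(\mathcal{C}) = \frac{1}{n} \sum_{j=1}^{k} \sum_{i \in C_j} (x_i - \mu_j)^T \Sigma_j^{-1} (x_i - \mu_j)
$$

where $\mu_j$ and $\Sigma_j$ are the mean and covariance matrix of the cluster $C_j$ and $n$ is the number of data points.

This represents the average (squared) distance of each point $x_i$ to the respective cluster mean. The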